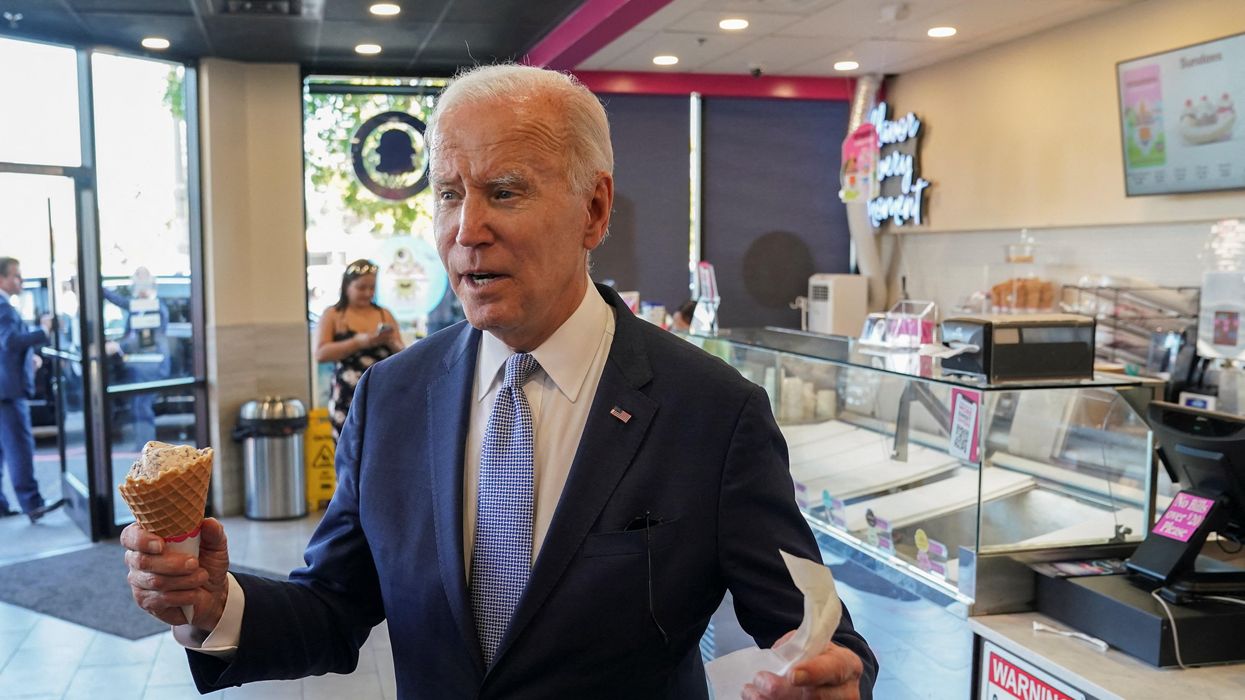

Conspiracy theorists thought President Joe Biden was singing the viral children's song "Baby Shark" in a recent video - but it turned out to be a deepfake.

The footage, which was spread on social media, was met with jokes by people who realised that the video was altered.

However, others seemed convinced that the video was the real deal and were quite angered by it.

One person on Twitter wrote: "Biden thinks the national anthem is baby shark. Are you kidding me[?]."

"[Working people] are struggling rn, Biden. Why don't you just sing baby shark again," another added.

A third wrote: "I don't stand with a bowl of mash potatoes that thinks the national anthem is baby shark you don't speak for me."

The video also made its way to platforms like Instagram and TikTok, where people seemingly fell for the faux clip.

Still, clues revealing the video to be fabricated were evident.

For one, a news chyron that was featured in the video claimed to originate from the satirical media outlet The Onion.

However, the outlet's website featured no article or video of Biden singing the children's tune.

So, where did it come from?

The video was initially shared on the YouTube channel known as Synthetic Voices.

The creator, a UK resident named Ali Al-Rikabi, used artificial intelligence to clone Biden's voice, making it seem as if the president were singing.

The video itself was genuine footage of the president giving a speech at Irvine Valley Community College in Irvine, California, on 14 October, which was streamed on C-SPAN at the time.

In conversation with the Daily Dot, Al-Rikabi said that he put the fake Onion chyron on his video to make it evident that it was made up.

Al-Rikabi also shared that he wasn't shocked that people still thought the video was genuine.

"I think that it is a testimony to how far technology in this field has developed...but that's why I leave clues such as the Onion logo," he said.

And when asked why he thinks people believed the video was real, Al-Rikabi told Indy100 that it may boil down to "unfamiliarity" with the technology.

"I think it probably comes down to unfamiliarity with deepfakes or the current audio cloning technology. It looks like some people are not really thinking about what they are viewing or asking any critical questions about the authenticity of it. We all do it to [an] extent," he said.

He added: "We like to scan read rather than read between the lines."

Although the deepfake's creator went out of his way to show that the footage was a joke, that wasn't enough for some people on the internet.

The Baby Shark deepfake shows just how easily conspiracy theories can still creep into the fabric of society, no matter how obviously fake the source material appears to others.

Updated on 25 October to include comment from Al-Rikabi
