On Thursday morning Donald Trump supporter Ann Coulter sent out a cryptic tweet that was interpreted as a white nationalist motto.

It simply read: 14.

Innocuous, and possibly another example of @edballs, but many white supremacists interpreted it as a sign she was conversant in their vocabulary.

Coulter later clarified, claiming she was referring to the fact there were 14 days until the end of the Obama presidency, when Donald Trump will take the oath of office at noon on 20 January.

The Huffington Post cast doubt on this, when they calculated that the inauguration was "15 days away" on Thursday, not 14.

Nevertheless, why did white supremacists and white nationalists jump on this?

While Trump was endorsed by the newspaper of the Ku Klux Klan, many of his backers, such as Coulter, have protested at being lumped in with the far-right movement.

In white supremacist circles, the number "14" is a reference to "Fourteen words", a slogan or mantra of the white supremacist world:

We must secure the existence of our people and a future for white children.

The motto was first attributed to David Lane, a member of terrorist group The Order, but has antecedents in "88 words" from Adolf Hitler's book Mein Kampf.

"14" is used as shorthand, or as a dog whistle, for "white genocide", which was the most popular hashtag used by followers of white supremacist and Neo-Nazi Twitter accounts in 2016.

Dylann Roof and White Supremacists Online

The white nationalist community online, manifested in popular consciousness as "the alt right," came to greater public prominence in 2016.

For redpill Trump fans, message boards such as 4chan and 8chan have been the environments where the far right has organised.

However, social media and the internet have also changed how violent extremists, who do more than troll, organise and receive instruction.

While the use of social media by Islamic extremists, particularly Isis, has been documented over the last five years, white nationalist communities are only just becoming the subject of research.

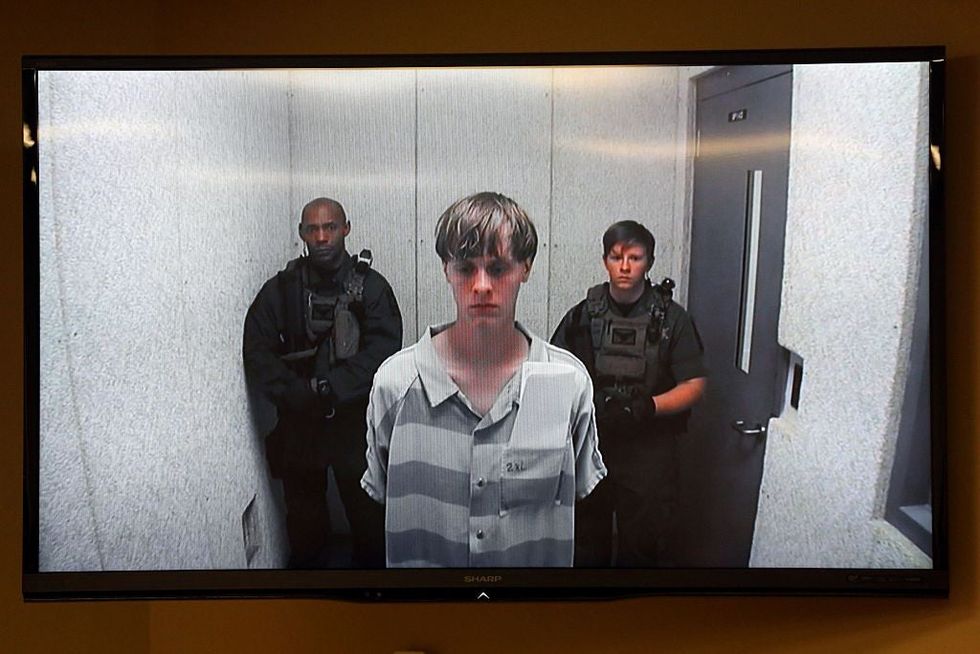

In December 2016, Dylann Roof was found guilty of all charges in the racially motivated killing of nine African-American worshippers in a church in Charleston, South Carolina.

Prosecutors have been making their closing argument this week in their case seeking the death penalty against him.

Contrary to some depictions of Roof as a completely isolated loner, he was, like most of us, computer literate.

Speaking to indy100 in the days following Roof's conviction, Mark Potok, a fellow at the Southern Poverty Law Center (SPLC), explained how white nationalists and white supremacists such as Roof use the internet to inform their beliefs and decisions.

In the 1990s it was not possible to recruit people through a computer screen.

At that time there was little evidence of internet based terrorism.

Potok explained that Roof was part of a cohort of white nationalists who got all of their news through the internet.

Previously Klansmen (members of the Ku Klux Klan) had been the most isolated people in American society. According to Potok, the web had changed this.

Roof did not watch network news, and his "loner" status kept him physically away from others.

Nor did Roof have contact with anyone in the white supremacist world.

According to Potok, this fact makes Roof an instructive case with regard to how white supremacists and white nationalists use the internet.

Roof posted under aliases on neo-Nazi sites. He made a couple of posts but they weren’t the kind of posts that would get a response.

No dialogue

Despite the lack of dialogue, the internet did play a part in Roof's radicalisation.

It didn't happen through conversations with other nationalists, and he wasn't specifically targeted or encouraged by white supremacists in the way that some Isis Twitter accounts lure Americans to join them through repeated interactions online.

Intentionally non-specific edicts are a tool of white supremacist leaders, and one which played a part in the radicalisation of Roof.

The Council of Conservative Citizens (CCC), which Potok described as "a direct descendant" of the white citizens' councils of the 1950s, is what has been called "The uptown Klan": a class distinction that allowed the Mayor and Police Chief of a town to be members of a white nationalist group without being tarred with the label of Klansmen.

In his "manifesto" written in relation to the Charleston shooting, Roof cited the CCC website.

In the days before Dylann Roof's massacre, a statement was posted on the CCC's website, which the SPLC attributes to CCC president Earl Holt III.

ofccprez2015: Old guys like me should dress in a disheveled manner, pretend to be intoxicated, hang-out in ‘the hood,’ and bring along a large-caliber handgun (with backup!) and help mitigate violent black crime at its source.

According to Vocativ, Holt's spokesperson Jared Taylor would neither confirm nor deny Holt had been the person to post that statement.

Potok summarised Holt's words as "Get a gun, and go to the ghetto".

Roof did.

2016 - the year of the social Neo Nazi?

In September 2016, J.M. Berger of the Center for Homeland and Cyber Security, a group at George Washington University, produced a comparative study of white nationalists and Isis, specifically how both used Twitter to recruit followers and propagate their messages.

It found that while Isis had originally been heralded as the 'modern' force, utilising social media, their reliance on Twitter as a tool was declining.

This was in stark contrast to the number of followers of white nationalist organisations and individuals, which had dramatically increased between 2014 and 2016.

Berger determined whether a Twitter profile was 'white nationalist' using several manual criteria: profiles which were openly so (did not use pseudonyms or anonymity), ones which displayed Nazi or Neo-Nazi imagery (such as the swastika), ones which contained URLs to white nationalist websites in the profile, and ones which were taken from hate group lists compiled by organisations such as the Southern Poverty Law Center.

Berger also found that white supremacist accounts outperformed Isis accounts in almost all current and historic metrics.

- More followers.

- More tweets per day.

- More interactions.

The only metric in which Isis accounts outperformed white nationalist accounts was message discipline.

Isis accounts consistently used the same hashtags and key phrases.

White nationalist accounts survive far longer than Isis accounts.

Even with Twitter's policy, only 288 of the 4,000 far-right accounts were suspended between their initial collection in April and the policy change in August 2016.

By comparison, 1,100 Isis accounts were suspended during and immediately after their collection in Berger's dataset.

Social media savvy

Berger's data also revealed the links most frequently tweeted by white nationalist accounts.

The top link was surprising.

Rather than any particular content (such as the six-hour documentary about Adolf Hitler that was also widely shared), the most frequently shared link was for the app Crowdfire.

Crowdfire and Statusbrew, the second most shared link, are social media engagement apps.

One of their main features is the mass following of other accounts based on the use of certain keywords.

With this in mind, the far right's dominance of certain parts of the internet is revealed to be less a reflection of its popularity than the result of a concerted social media strategy.

The far right is growing its brand, just like a social media marketing executive would.

Loners and Lone Wolfers

As the Roof case demonstrated, the internet is used by white nationalists to encourage 'lone wolfers'.

A culture of individuals acting alone, without direct instruction, is what white supremacists and white nationalists seek to cultivate.

Decisions by the KKK were made in groups up until 1968, when it changed tack.

Groups lead to talk, conspiracies get out. Better to inculcate a culture of endorsement of actions, and have one way communication.

Paul Jackson, a senior lecturer at the University of Nottingham who is currently researching Neo-Nazism, told indy100:

I think it is important to distinguish between ‘loners’, who have no two way contact with extreme right groups; ‘lone actors’, who do have an ongoing contact with such groups and whose ideology and activism sustain an individual’s justification for violence, and those who develop into small cells, such as [Timothy] McVeigh.

This presents the question: Were Roof, and actors such as Anders Breivik and Timothy McVeigh "loners"?

Or did Roof's interaction with an online community, unchecked by counter-argument, prompt his horrific crimes?

Prevent

While there are strategies in place to combat Islamic extremists, far fewer exist to counter white supremacists.

Paul Jackson continued:

[The] Prevent Agenda and government policy in general does not do enough to take the far right seriously enough. Basically, more training, resources, monitoring and funded research is needed - though there are also some good examples of good practice under Prevent too.

Moreover, Alex Krasodomski-Jones of think tank DEMOS told indy100 that Isis' threat as a remote command centre is exaggerated:

Regarding the comparison between Islamic Groups and their leadership, I would point out that it is far from certain that some (even high-profile) terrorist incidents have been centrally coordinated in any way... The pattern is often that an attack takes place and then the perpetrator is announced as a 'soldier of the caliphate', but whether they have been instructed to do it isn't clear.

In the battle for the online space there isn't a great deal of structure. In 2014 the IS media channels were the primary sources of IS propaganda but as they have been degraded over the last couple of years, more and more IS content is being produced by users who sympathise but are not being centrally coordinated either.

The far right online appears to be a less detectable threat than Isis recruitment, which, when it was more potent, could be tracked through interactions.

A virtual community with real consequence

Krasodomski-Jones commented on the danger of virtual communities hiding in plain sight.

Regarding membership of extremist groups, I believe the internet has utterly transformed what it means to be part of a group. Party membership is declining all over the place, and it's been replaced by new, digital, informal signifiers of membership. Joining a forum, 'liking' a Facebook page and sharing content from it, buying clothing or fridge magnets, are examples of a new form of political membership made possible by the net.

Policing these "groups" without infringing on everybody's free speech is therefore incredibly difficult, just as policing pre-internet groups with no direct interaction was difficult in the past.

What Twitter and social media can do

A spokesperson for Twitter directed indy100 to their formal policies for prohibiting hateful conduct, and to how they have made reporting hateful speech and aggression easier.

Twitter's enforcement options when it comes to prohibiting hateful conduct are varied.

Their policy reads:

The consequences for violating our rules vary depending on the severity of the violation and the person’s previous record of violations. For example, we may ask someone to remove the offending tweet before they can tweet again. For other cases, we may suspend an account.

The material Twitter pointed to included policies on automation and spamming and on prohibiting hateful conduct, as well as the retraining of staff to respond to such conduct.

Twitter did not answer questions regarding the frequency with which they shut down white nationalist or white supremacist accounts.

Update: Dylann Roof was sentenced to death on 10 January 2017.
