Becca Monaghan

Dec 18, 2024


Artificial intelligence has evolved from a thing of science fiction to a tangible part of society. While some AI tools can be hugely beneficial, they also raise major ethical concerns, from deepfakes spreading misinformation to the creation of NSFW content without consent.

Now, AI is entering the realm of mental health care.

With demand for affordable and accessible therapy ever growing, many people are turning to AI-powered tools such as ChatGPT for support, as an alternative to traditional therapy sessions with qualified professionals.

These tools provide emotional support, mental health coaching and even cognitive behavioural therapy (CBT), all through a screen. People have candidly turned to TikTok to share their mental health journeys with the help of AI, a trend that highlights the global shortage of mental health professionals and the difficulty of accessing therapy for underserved populations.

@fluentlyforward How i use #chatgpt for makeshift #therapy or a way to understand my feelings

While AI therapy holds significant potential as a solution for some of life's milder problems, such as getting over a breakup or days when you feel slightly burned out, it raises crucial questions about the limits of technology in understanding and treating the human mind, especially in times of crisis.

Professional therapy stands apart from AI tools in its depth of training, personalised care, and therapeutic relationships.

Dr Robin Lawrence, a psychotherapist and counsellor at 96 Harley Street with over 30 years of experience, told Indy100: "A professional concentrates solely on the person seeking support or advice... they explore the situation and circumstances of the problem with the client."

This allows therapists to provide an objective perspective, which can be crucial in resolving issues – something AI is not equipped to do.

Dr Lucy Viney, clinical psychologist and co-founder of The Fitzrovia Psychology Clinic, adds that professionals are highly trained, explaining, "In the UK, a clinical psychologist has completed an undergraduate degree in psychology and a Doctorate in clinical psychology," ensuring a high level of expertise and professionalism.

She emphasises that clinical psychologists are "trained in a variety of psychological treatments... and can draw on multiple models to tailor therapy to suit your specific needs".

According to Dr Viney, this flexibility and evidence-based approach is where AI falls short: AI tools provide only "generalised information, symptom monitoring and feedback" and cannot offer personalised care. She also stresses the importance of human connection in therapy, saying, "A strong therapeutic relationship has been proven to be a significant predictor in positive therapeutic outcomes." That is a connection AI cannot replicate.

While AI tools can provide general support, they ultimately lack the tailored, emotionally intelligent care that professional therapy offers.

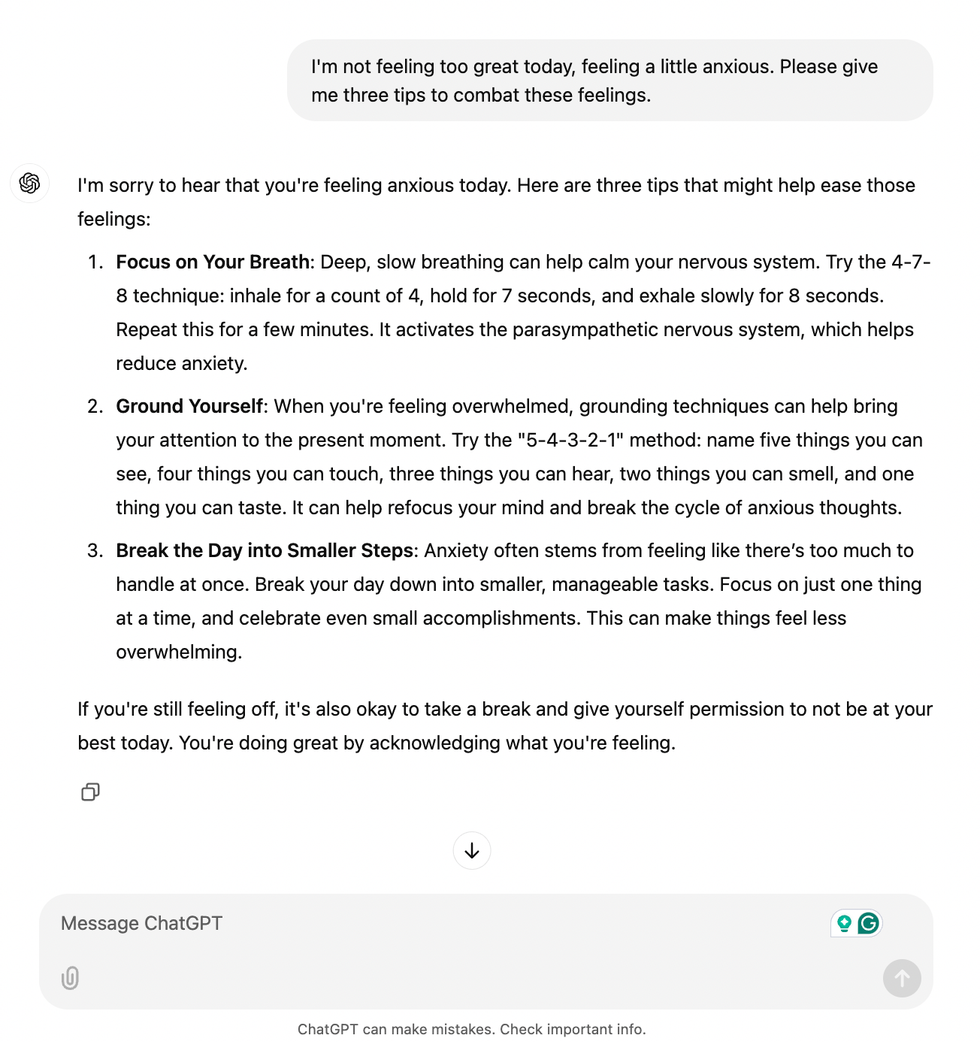

I decided to put ChatGPT’s advice to the test, and it didn’t take long to see why so many people are turning to AI tools for support during stressful times.

When I asked for three ways to combat anxious feelings, I received a thoughtful response: breathwork exercises, grounding techniques, and advice on breaking the day into manageable segments to prevent feeling overwhelmed.

What struck me most was how closely these suggestions mirrored the strategies I’ve learned in my own therapy sessions with a qualified professional: effective, practical and surprisingly similar to expert guidance I’ve received in the past.

Both Dr Lawrence and Dr Viney acknowledge that AI tools may offer some benefits in mental health care, though they stress the importance of always seeking professional help.

Dr Lawrence believes that AI may help clients recognise their dilemmas, stating: "Possibly, with AI, by speaking aloud in response to questions, it may be that the client starts to recognise the dilemma."

However, he stresses that AI cannot replicate the nuanced, emotionally attuned relationship between a client and a professional, highlighting that a real therapist "picks up on keywords, past conversations, expressions, hesitation and so much more besides".

He cited the case of a mother with post-natal depression who was given CBT via a computer. When the machine responded with a mechanical "I’m sorry to hear that", the woman ended the session, an example he says illustrates the irreplaceable role of "human empathy and careful listening".

On the other hand, Dr Viney points out that AI tools can increase accessibility for people who might face barriers to therapy.

"They can help individuals who may have concerns around anonymity and reduce stigma," she says, adding that such tools can offer continuity and long-term support. However, Dr Viney agrees with Dr Lawrence that AI should "augment, rather than replace, professional services."

Even so, relying solely on AI for mental health support carries significant risks, particularly for people in crisis.

Dr Lawrence underlines that "AI is not real. It does not have the emotional intelligence of a human," and argues that vulnerable individuals should not be forced into relying on AI due to cost constraints. He warns that in the worst-case scenario, a client may feel "no better than when they started," and in more severe cases, the consequences could be tragic, with a client potentially "taking their own life."

The absence of human empathy in AI, he suggests, makes it ill-suited for dealing with critical mental health situations.

Dr Viney, while recognising AI's potential to widen access for people facing barriers to therapy, also cautions about the risks of algorithmic bias.

She explains that "bias in the data used to train the chatbot could lead to... inaccurate or even harmful advice," which could perpetuate systemic biases or inadvertently discriminate against certain populations.

According to Dr Viney, these biases could be especially harmful to vulnerable groups who turn to AI due to "limited access to mental health services or other social determinants of health."

Then there are the privacy and data security concerns that come with turning to AI.

Dr Lawrence tells Indy100 it is important to ensure that personal data is handled securely, explaining that "a potential user would need to ensure there was written understanding that their details would not be shared unless explicit permission was given."

Similarly, Dr Viney points out that engaging with AI-based platforms for mental health support means users inevitably share sensitive information, leaving them vulnerable to breaches of confidentiality. She suggests that privacy can be compromised in AI interactions, particularly with systems like ChatGPT, and flags issues around "therapist disclosure" and "simulated empathy", both of which can challenge user rights and affect therapeutic outcomes.

From a technical perspective, Kirill Stashevsky, CTO and co-founder of ITRex, tells Indy100 that while AI can vastly improve access to therapy, it also presents serious risks related to data ownership, security and misuse.

"If AI systems collect, process, or store this information, there is a risk of security breaches or unauthorised access," he says, pointing to a cyberattack on Confidant Health that exposed sensitive data from over 120,000 users.

Another concern is the unclear ownership of data – whether it belongs to the user or the company behind the AI service. This ambiguity can lead to exploitation.

Even if data is anonymised, "advanced AI and data analysis techniques may be able to re-identify individuals," raising additional privacy concerns.

Stashevsky also points to the challenge of accountability, noting that if an AI system provides harmful advice or fails to detect critical issues like suicidal ideation, determining responsibility can be difficult.

If you or anyone you know is dealing with mental health issues, both Dr Lawrence and Dr Viney recommend reaching out for professional help.

They suggest starting with your GP, who can help assess your situation and refer you to the right kind of therapy, whether that's through a therapist they work with or a local mental health charity. The British Association for Counselling and Psychotherapy (BACP) also has a directory of qualified professionals.

While AI tools may seem like a quick fix, both experts agree that when it comes to mental health, nothing beats the support and guidance of a real person who truly understands your needs.

Indy100 reached out to OpenAI, the company behind ChatGPT, for comment.

If you are experiencing feelings of distress, or are struggling to cope, you can speak to the Samaritans, in confidence, on 116 123 (UK and ROI), email jo@samaritans.org, or visit the Samaritans website to find details of your nearest branch.

If you are based in the USA, and you or someone you know needs mental health assistance right now, call or text 988, or visit 988lifeline.org to access online chat from the 988 Suicide and Crisis Lifeline. This is a free, confidential crisis hotline that is available to everyone 24 hours a day, seven days a week.

If you are in another country, you can go to www.befrienders.org to find a helpline near you.
